‘Data’ is not the plural of ‘anecdote’

(Julian Baggini)

Social Impact Analyst Association 2014 Talking Data: Measurement with a Message Conference. Speaking notes for Benoît Gauthier, President of the Canadian Evaluation Society, on November 3, 2014.

Thank you for inviting me today. I am glad to represent the Canadian Evaluation Society and its 1,800 members. I hope to offer a small contribution to your reflections based on my experience as an evaluator and on the collective experiences and lessons learned by evaluators over the last several decades.

The focus of this conference is on "measurements that deliver the right message". The conference program cites examples like writing for funders, communicating to internal decision makers, and telling stories to stakeholders. Ultimately, this conference's theme reflects a concern with bringing information to life, and making it usable and used.

Utilization is a long-standing preoccupation of evaluators – the community that I represent here today. The seminal work by Michael Quinn Patton dates back to 1978, almost 40 years ago. "Utilization-Focussed Evaluation" was the title of his book [1], which has seen four editions, plus a shorter version published in 2012 [2]. This topic is alive and well in evaluation. The expression "Utilization-Focussed Evaluation" returns about 400,000 results on Google, not to mention more than 6,000 scholarly references to Patton's work.

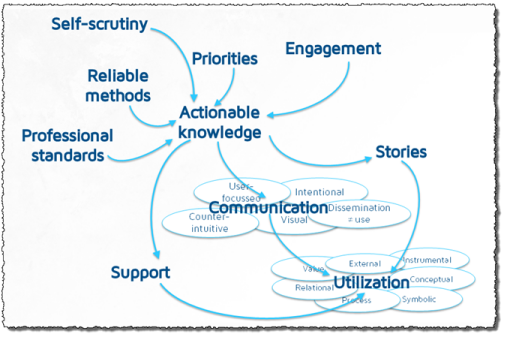

Four decades of research on evaluation focussed on utilization have led to a number of firm observations that I will attempt to summarize now.

First, for evaluation, or more generally for knowledge, to be used in decision-making, the organization must be ready to accept the challenge of questioning itself. There has to be permeability to new ideas and data. An organization founded on ideology and unwilling to put its premises to the test will simply not use performance information that contradicts its foundations.

Second, actionable knowledge is a derivative product: it is produced by a process of engagement which co-opts staff and managers within the evaluation process. Evaluation conducted in a totally arm's-length manner, without input from the organization itself, is much less likely to get used – unless the findings happen to correspond with the interests or expectations of a particularly influential group. Ideally, engagement is found at every step of the way: in defining the approach, in identifying methodologies and yardsticks, in designing the logic of the demonstration, in interpreting the observations, and in drawing conclusions.

Third, the universe of possible evaluation issues or social outcomes is always much larger than what is allowed by the practical limitations imposed on any feedback process (money, time, expertise, etc.). Therefore, it is crucial to identify the priority purposes for the feedback – and those are the concerns that staff, management and other stakeholders rank highest. Here again, the priorities for knowledge development must be identified through education and engagement.

Fourth, scattered collections of facts and figures are unlikely to get much use. Knowledge must be organized into stories that make sense to stakeholders, and that respect the complexity of the world in which the organization operates. It is all too easy for those at whom the knowledge is aimed to dismiss a counter-intuitive finding by pointing to a particular case that purports to lead to the opposite conclusion. To organize their work and to tell their stories, evaluators have used logic models, program theories, systemic maps, and other such tools which all aim to simplify the world – but avoid the science of particular cases – while allowing for important real-life interactions to be portrayed in their conclusions.

Fifth, communication to and with decision-makers is clearly a preponderant factor in utilization of knowledge. There are several facets to communication. Timing is essential: communication of results must start early and be staged over time. Method is pivotal: while the final report is still a preferred vehicle, other methods are gaining ground, such as thematic summaries, newsletter articles, brochures, press releases, oral briefings, discussion groups, retreats, storyboarding sessions, creative presentations, debates, etc. Adapting the content is also key: some audiences couldn't care less about measurement methods and want to focus on lessons learned, while others need to be convinced that the measurement is reliable before paying attention to the results.

Patton identified five principles of utilization-focussed reporting:

Sixth, utilization must be facilitated with after-sale service or support: decision-makers may well use carefully crafted new knowledge produced via close engagement, but they may also need to be reminded of the implications of this knowledge in the conduct of their business. The evaluator or the social impact analyst does not own the knowledge but has profoundly contributed to developing it; because of that, they are in a pivotal position to help apply the knowledge to various situations in the organization.

This last utilization factor posits a position of influence for the analyst. This stance can conflict with the typical level of independence we want to adopt. So how does one reconcile independence, influence, and responsiveness to organizational needs? Rakesh Mohan and Kathleen Sullivan [3] have proposed that these concepts are not mutually exclusive and that strategies are available to maximize independence and responsiveness. These strategies include:

The last of these strategies is quite important. Evaluators as well as social impact analysts are open to criticism if they don't adhere to a clear set of well-established professional standards. It has taken evaluators decades to develop standards that are now officially accepted in North America, Europe, and Africa [4]. Essential elements of these standards relate to stakeholder engagement, independence, credibility of evidence, protection of the confidentiality of certain information, quality assurance, balance in analysis and reporting, and record keeping. Such standards constitute one of the three pillars of the Canadian Evaluation Society Professional Credentialing program, along with ethics guidelines and professional competencies.

So, what does that mean in relation to the theme of this conference? The presentation of data is a fundamental ingredient in the recipe for influencing decision-making and knowledge utilization. However, it is only one of several: I would contend that great presentation of poorly targeted evidence is less efficacious than poor presentation of well-chosen evidence. But I wholeheartedly agree that optimal utilization of evidence occurs when all the utilization drivers are aligned: openness, engagement, prioritization, storytelling, communication, and influence.

I want to leave you with one final thought. Utilization comes in a number of forms. Kusters and her associates identified seven types of uses [5]. They are:

In situating how to best impact decision-making, it is important to remember that utilization comes in a multitude of forms. Also, management science tells us that decision makers are not looking for certainty; they strive for a reduction of uncertainty. Managers also need information that is credible in their eyes and actionable in their lives.

Thank you for giving me this opportunity to talk to you today about how evaluators see the use of the knowledge they generate. I hope we get an opportunity to discuss how this applies to the world of social impact analysis.

SOURCES

[1] Patton, Michael Quinn (1978) Utilization-Focussed Evaluation, first edition, Sage Publications

[2] Patton, Michael Quinn (2012) Essentials of Utilization-Focussed Evaluation, Sage Publications

[3] Mohan, Rakesh and Kathleen Sullivan (2006) "Managing the Politics of Evaluation to Achieve Impact" in New Directions for Evaluation, no. 112

[4] Yarbrough, D. B., Shulha, L. M., Hopson, R. K., & Caruthers, F. A. (2011). The program evaluation standards: A guide for evaluators and evaluation users (3rd ed.), Sage Publications

[5] Kusters, Cecile et al. (2011) Making Evaluations Matter: A Practical Guide for Evaluators, Centre for Development Innovation, Wageningen University & Research Centre, Wageningen, The Netherlands
